Update: 2025-11-20

WeChat Homepage

Dendritic Learning

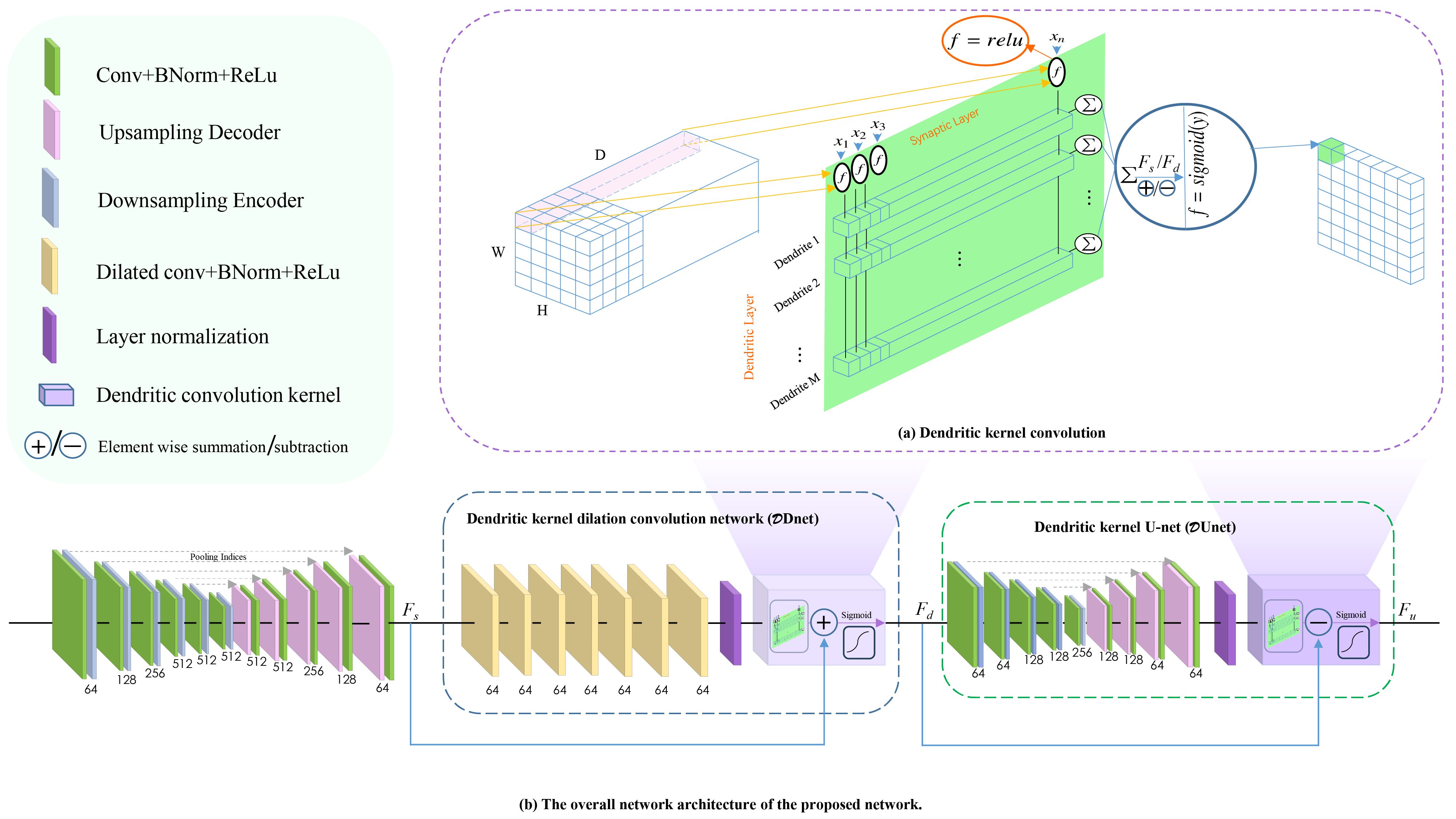

DKNet: Dendritic Kernel Convolutional Neural Network for Breast Ultrasound Images Segmentation

This paper proposes a novel Dendritic Kernel Convolution (D-convolution) module inspired by the nonlinear excitatory and inhibitory mechanisms of biological dendrites. Integrated into a three-stage network (DKNet), it mimics the human visual system’s coarse-to-fine processing for breast ultrasound image segmentation. D-convolution enhances feature representation, suppresses noise, and enables more precise boundary detection by modeling synaptic thresholds and multi-branch dendritic integration. Experimental results on public datasets show that DKNet outperforms state-of-the-art methods in accuracy, robustness, and segmentation quality. These findings demonstrate the potential of biologically inspired architectures for challenging medical imaging tasks.

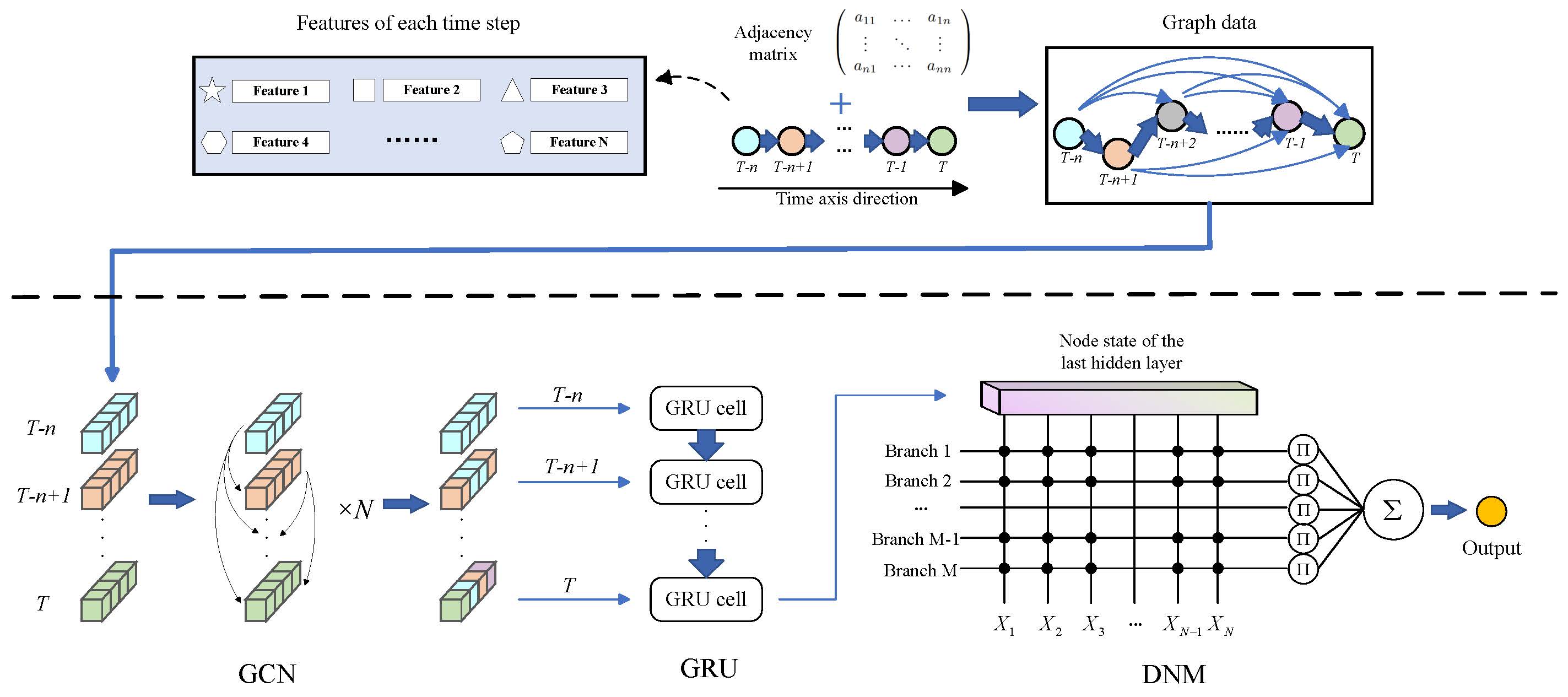

GGDNM: Short-Term Load Forecasting Based on Graph Convolution and Dendritic Deep Learning

This paper proposes GGDNM, a hybrid deep learning model combining Graph Convolutional Networks (GCN), Gated Recurrent Units (GRU), and a Dendritic Neuron Model (DNM) for short-term load forecasting. By modeling time-series load data as a graph, GGDNM captures spatial-temporal dependencies and leverages biological neuron-inspired nonlinearity. Experiments on national and regional datasets show GGDNM achieves superior accuracy and generalization over existing models.

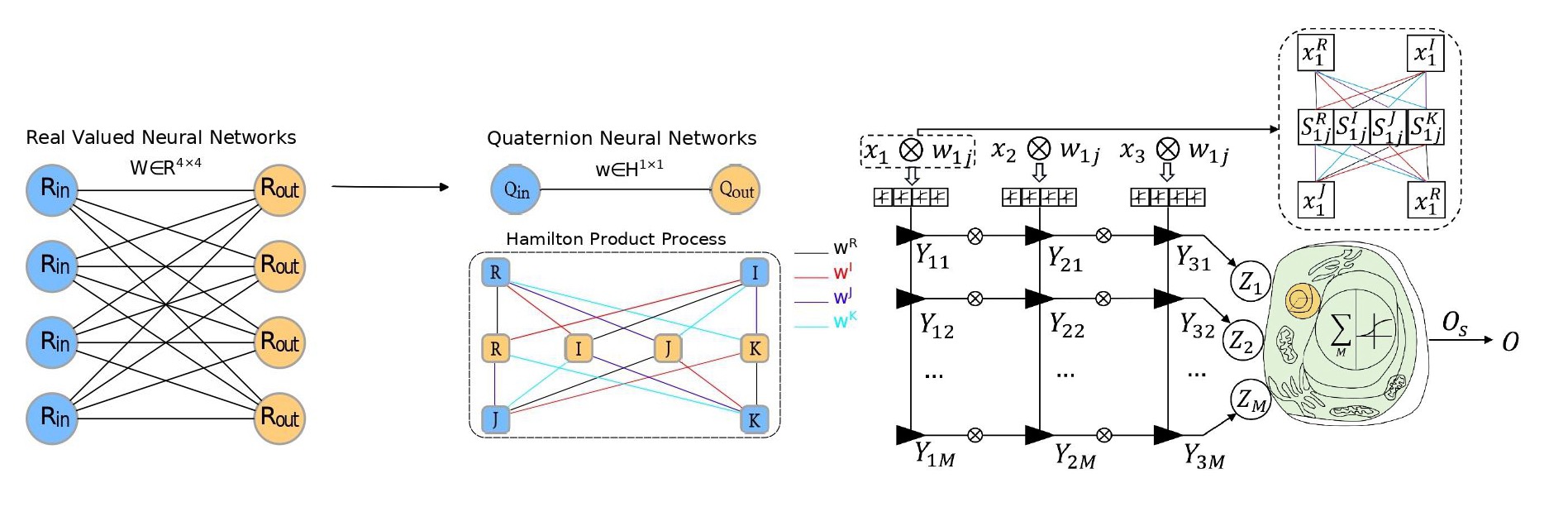

QDNM: Quaternion Dendritic Neuron Model for Multivariate Financial Time Series Prediction

The quaternion dendritic neuron model (QDNM) is a neuron model for multivariate time-series prediction. It generalizes the original DNM to the quaternion domain, combining the dendrite-like nonlinear computation of DNMs with the multidimensional feature integration and analysis capabilities of quaternion encoding, which makes the model suitable for multivariate prediction tasks. The backpropagation (BP) algorithm for the model is derived within the framework of quaternion algebra.
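
Quaternion algebra rests on the Hamilton product, which mixes all four components of two quaternions in a single non-commutative multiplication. The sketch below shows only that product. How QDNM applies it inside its synapses and dendrites is not described here, and the tuple layout `(real, i, j, k)` and the function name are assumptions made for illustration.

```python
def hamilton_product(p, q):
    """Multiply two quaternions given as (real, i, j, k) tuples."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,  # real part
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,  # i component
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,  # j component
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,  # k component
    )


# The basis elements satisfy i*j = k and j*i = -k (non-commutativity).
I = (0, 1, 0, 0)
J = (0, 0, 1, 0)
ij = hamilton_product(I, J)
ji = hamilton_product(J, I)
```

Because each output component depends on every input component, up to four related variables packed into one quaternion are combined jointly rather than one at a time.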

MDPN: A Lightweight Multi-Dendritic Pyramidal Neuron Model with Neural Plasticity on Image Recognition

MDPN is a lightweight neural network architecture that simulates the dendritic structure of pyramidal neurons to improve image representation, emphasizing nonlinear dendritic computation and neuroplasticity. Compared with traditional feedforward network models, MDPN focuses on the importance of a single neuron and redefines the function of each subcomponent. Experimental results verify the effectiveness and robustness of MDPN on 16 standardized image datasets.

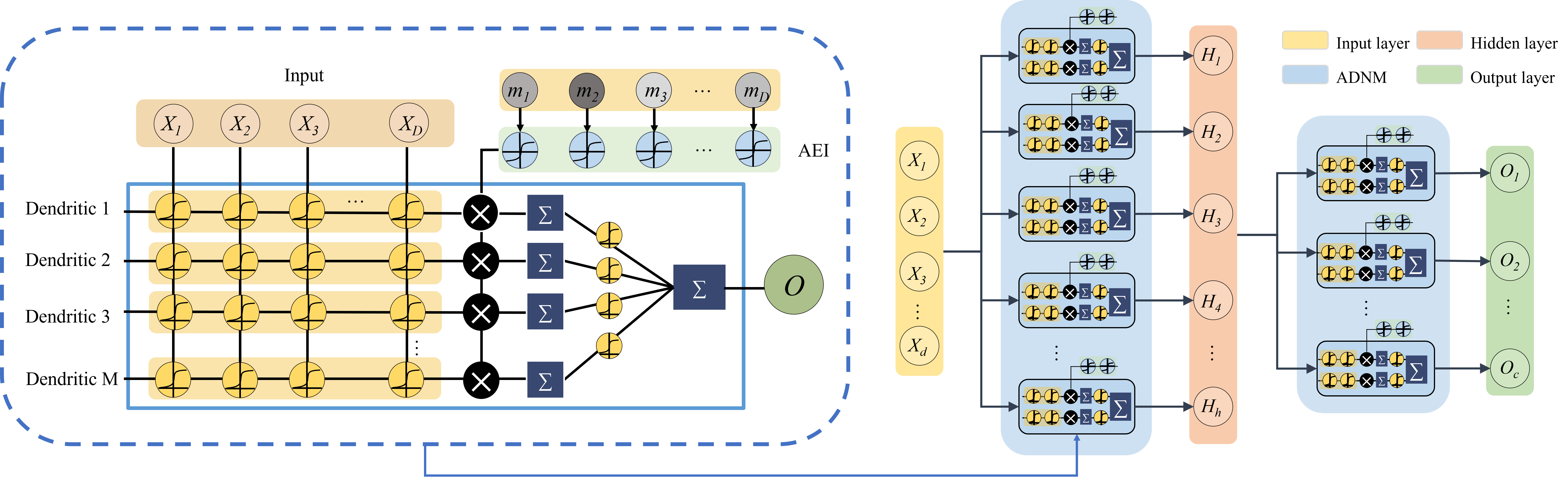

ADNS: Alternating Excitation-Inhibition Dendritic Computing for Classification

ADNS is a novel neural network architecture that emulates the excitation and inhibition mechanisms found in biological neurons. Emulating these mechanisms boosts the feature extraction and nonlinear computation capabilities of individual neurons, so that they can be stacked into multi-layer networks, which makes deep dendritic neuron models feasible. Experimental results show that ADNS significantly outperforms traditional models on 47 feature classification datasets and two image classification datasets, CIFAR-10 and CIFAR-100.

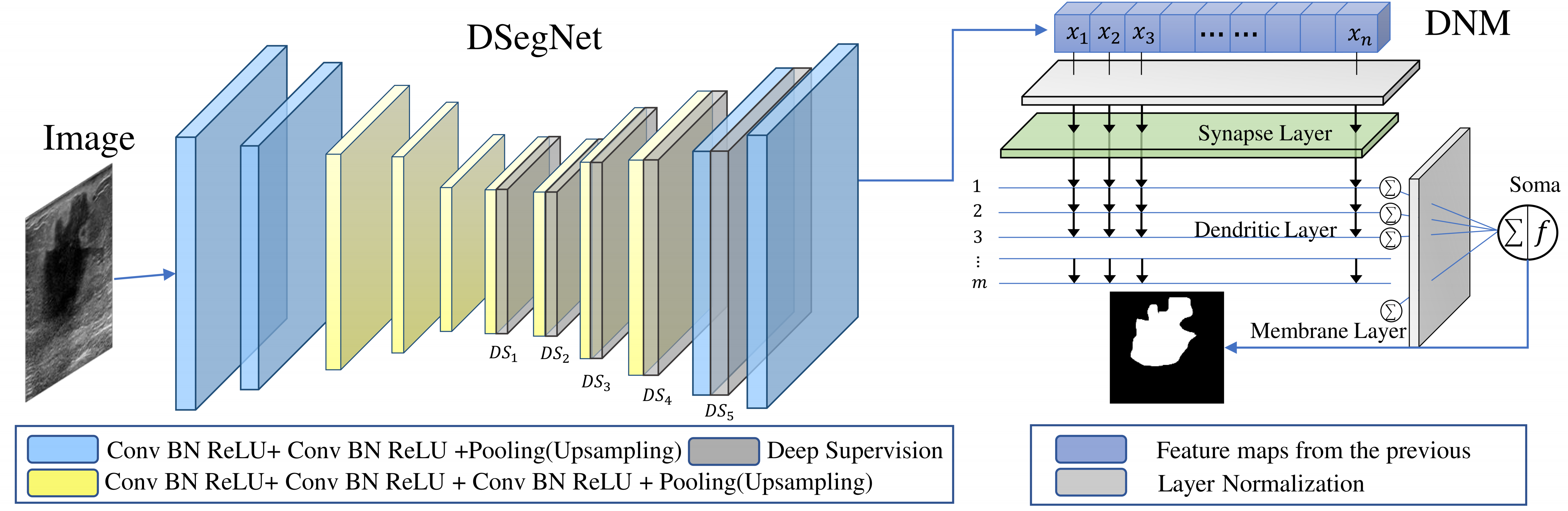

DDNet: Dendritic Deep Learning for Medical Segmentation

DDNet is a novel network that integrates biologically interpretable dendritic neurons and employs deep supervision during training to enhance the model's efficacy. To evaluate the proposed method, comparative experiments were conducted on the STU, Polyp, and DatasetB datasets. The results demonstrate the superiority of the proposed approach.

DVT: Dendritic Learning-Incorporated Vision Transformer for Image Recognition

DVT is a Dendritic Learning-incorporated Vision Transformer, inspired by dendritic neurons in neuroscience and designed for universal image recognition tasks. Its architecture incorporates highly biologically interpretable dendritic learning techniques, enabling DVT to handle complex nonlinear classification problems.

DVT is motivated by the hypothesis that networks whose architecture has high biological interpretability also perform better on image recognition tasks. The experimental results reported in the associated paper show a substantial improvement by DVT over current state-of-the-art methods on four general datasets.

CDNM: Fully Complex-valued Dendritic Neuron Model

In this article, we first extend DNM from the real-valued domain to the complex-valued one. The performance of the complex-valued DNM (CDNM) is evaluated on a complex XOR problem, a non-minimum phase equalization problem, and a real-world wind prediction task. We also compare a set of elementary transcendental functions as activation functions and carry out preliminary experiments to determine hyperparameters. The experimental results indicate that the proposed CDNM significantly outperforms real-valued DNM, complex-valued multi-layer perceptron, and other complex-valued neuron models.

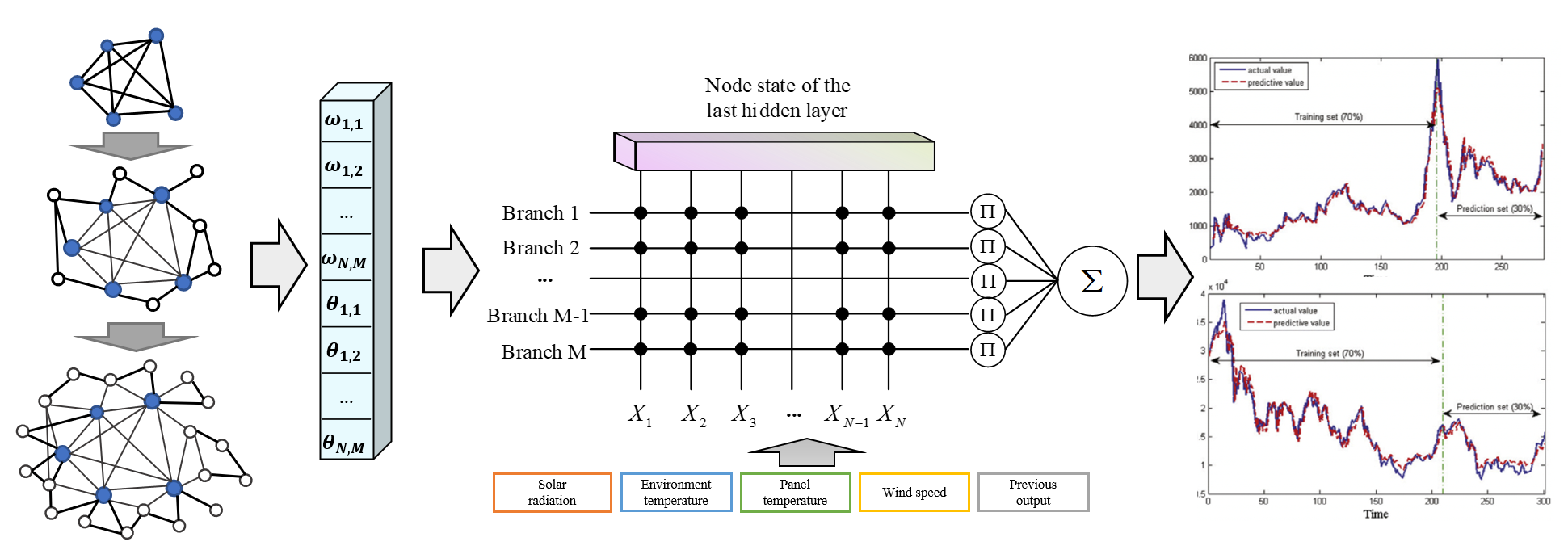

DSNDE: Improving Dendritic Neuron Model with Dynamic Scale-free Network-based Differential Evolution

This paper develops a dynamic scale-free network-based differential evolution algorithm (DSNDE) to train the dendritic neuron model (DNM). Its effectiveness is verified through experiments on 14 benchmark datasets and a photovoltaic power forecasting problem, in comparison with 9 meta-heuristic algorithms. The results show that DSNDE performs better in most cases.
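
For readers unfamiliar with differential evolution, the following is a minimal sketch of the classical DE/rand/1/bin scheme, not of DSNDE itself: it omits the dynamic scale-free network that DSNDE uses. All names and default values (`f`, `cr`, `pop_size`, `bound`) are illustrative assumptions. To train a neuron model this way, `loss` would be the model's error on the training data and each individual would hold its flattened parameters.

```python
import random


def differential_evolution(loss, dim, pop_size=20, generations=200,
                           f=0.5, cr=0.9, bound=1.0, seed=0):
    """Minimize loss(vector) with classical DE/rand/1/bin (illustrative)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-bound, bound) for _ in range(dim)]
           for _ in range(pop_size)]
    fitness = [loss(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees one mutated gene
            trial = [
                pop[a][d] + f * (pop[b][d] - pop[c][d])
                if (rng.random() < cr or d == j_rand) else pop[i][d]
                for d in range(dim)
            ]
            trial_fit = loss(trial)
            # Greedy selection: keep the trial only if it is no worse.
            if trial_fit <= fitness[i]:
                pop[i], fitness[i] = trial, trial_fit
    best = min(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]


# Toy usage: minimize the sphere function in three dimensions.
best_vec, best_fit = differential_evolution(
    lambda v: sum(x * x for x in v), dim=3)
```

Because the method needs only loss evaluations and no gradients, it can be applied to models whose error surface is hard to handle with gradient-based training.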

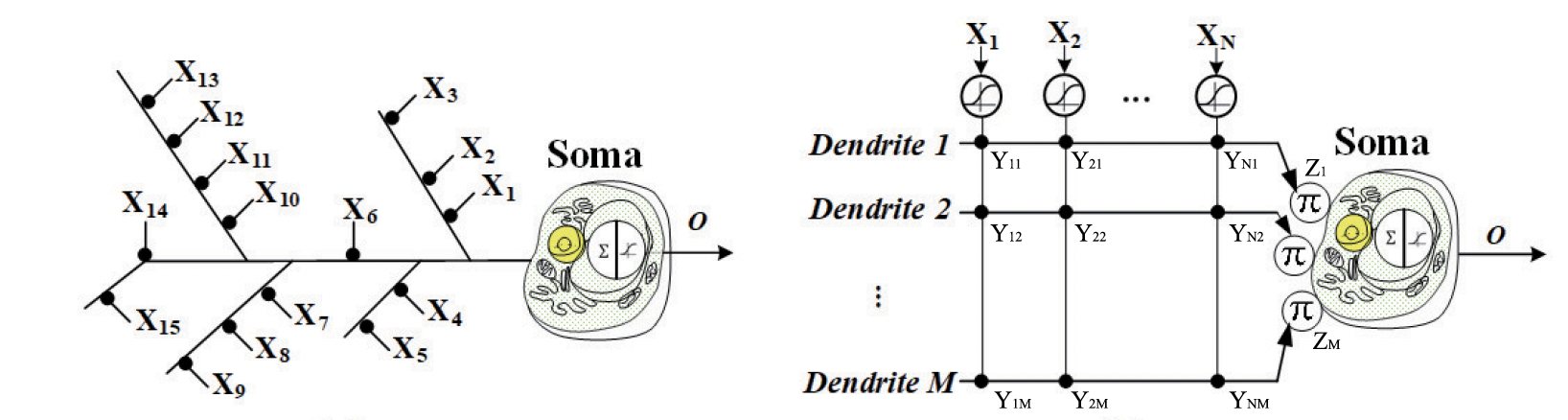

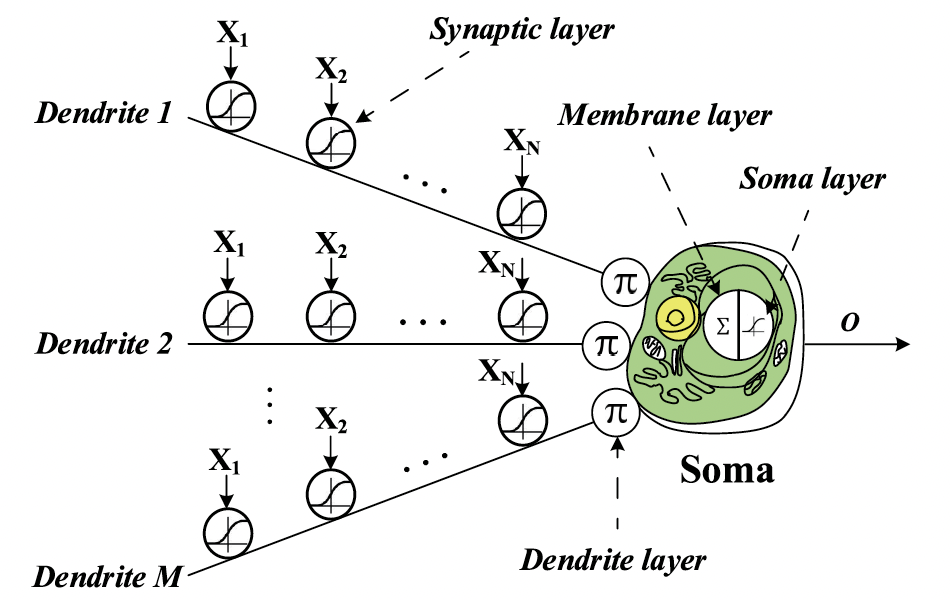

DNM: Dendritic Neuron Model with Effective Learning Algorithms for Classification, Approximation, and Prediction

We develop a new dendritic neuron model (DNM) that accounts for the nonlinearity of synapses, both to better understand biological neuronal systems and to provide a more useful method for addressing the difficulties of understanding and training ANNs. To improve its problem-solving performance, six learning algorithms are used to train it for the first time. Experiments on 14 different problems involving classification, approximation, and prediction are conducted using a multilayer perceptron and the proposed DNM. The results suggest that the proposed learning algorithms are effective and promising for training DNM and thus make DNM more powerful in solving classification, approximation, and prediction problems.
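
The summary above does not give the model's equations. The sketch below follows the four-layer structure commonly described for DNMs: sigmoid synapses, a multiplicative dendritic layer, a summing membrane, and a sigmoid soma. The parameter names (`w`, `q`, `k`, `k_soma`, `theta_soma`) and their default values are assumptions for illustration, not the paper's notation or settings.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dnm_forward(x, w, q, k=5.0, k_soma=5.0, theta_soma=0.5):
    """Forward pass of a single dendritic neuron (illustrative sketch).

    x: list of n inputs.
    w, q: synaptic weights and thresholds, one row per dendritic branch,
          one column per input.
    """
    membrane = 0.0
    for w_row, q_row in zip(w, q):
        branch = 1.0
        for x_i, w_ij, q_ij in zip(x, w_row, q_row):
            # Synaptic layer: nonlinear (sigmoid) response of each synapse.
            # Dendritic layer: synapses on one branch are multiplied.
            branch *= sigmoid(k * (w_ij * x_i - q_ij))
        # Membrane layer: branch outputs are summed.
        membrane += branch
    # Soma: final sigmoid with its own threshold.
    return sigmoid(k_soma * (membrane - theta_soma))


# One branch that responds when x[0] is high and x[1] is low.
w = [[1.0, -1.0]]
q = [[0.5, -0.5]]
high = dnm_forward([1.0, 0.0], w, q)  # above 0.5
low = dnm_forward([0.0, 1.0], w, q)   # below 0.5
```

The product within a branch behaves like a soft logical AND over its synapses and the sum across branches like a soft OR, which is one way a single neuron can express nonlinear decision rules.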